MySQL数据库优化的一些笔记

0. 索引很重要

之前列举记录用了下面的语句。state字段为索引。

- SELECT * FROM feed_urls WHERE state='ok' AND feed_url<>'' LIMIT N,10

当记录数量很大时,有几万之后,这句SQL就很慢了。主要是因为feed_url没有建立索引。后来的解决方法是,把feed_url为空的,设为一个ok以外的state值,就行了。

1、索引不是万能的

为了计算记录总数,下面的语句会很慢。

- mysql> SELECT COUNT(*) FROM feed_urls WHERE state='error';

- +----------+

- | COUNT(*) |

- +----------+

- | 30715 |

- +----------+

- 1 row in set (0.14 sec)

- mysql> EXPLAIN SELECT COUNT(*) FROM feed_urls WHERE state='error'\G

- *************************** 1. row ***************************

- id: 1

- select_type: SIMPLE

- table: feed_urls

- type: ref

- possible_keys: state,page_index

- key: page_index

- key_len: 10

- ref: const

- rows: 25936

- Extra: Using where; Using index

- 1 row in set (0.00 sec)

state为索引,请求用时140ms。遍历了state='error'索引下的每一条记录。

- mysql> SELECT state,COUNT(*) FROM feed_urls GROUP BY state;

- +----------+----------+

- | state | COUNT(*) |

- +----------+----------+

- | error | 30717 |

- | fetching | 8 |

- | nofeed | 76461 |

- | ok | 74703 |

- | queued | 249681 |

- +----------+----------+

- 5 rows in set (0.55 sec)

- mysql> EXPLAIN SELECT state,COUNT(*) FROM feed_urls GROUP BY state\G

- *************************** 1. row ***************************

- id: 1

- select_type: SIMPLE

- table: feed_urls

- type: index

- possible_keys: NULL

- key: state

- key_len: 10

- ref: NULL

- rows: 431618

- Extra: Using index

- 1 row in set (0.00 sec)

请求用时550ms。遍历了每个state下的每一条记录。

改进方法:

独立一个表用来计数,使用MySQL的Trigger同步计数:

- CREATE TRIGGER my_trigger AFTER UPDATE ON feed_urls

- FOR EACH ROW BEGIN

- IF OLD.state <> NEW.state THEN

- IF NEW.state='ok' THEN

- UPDATE feed_stat SET count_feed = count_feed + 1;

- END IF;

- IF NEW.state IN ('ok', 'error', 'nofeed') THEN

- UPDATE feed_stat SET count_access = count_access + 1;

- END IF;

- END IF;

- END

2. 当分页很大时

- mysql> SELECT * FROM feed_urls LIMIT 230000, 1\G

- *************************** 1. row ***************************

- id: 736841f82abb0bc87ccfec7c0fdbd09c30b5a24d

- link: http://mappemunde.typepad.com/

- title: Tim Peterson

- feed_url: NULL

- update_time: 2012-05-12 11:01:56

- state: queued

- http_server: NULL

- abstract: NULL

- previous_id: ceea30e0ba609b69198c53ce71c44070d69038c5

- ref_count: 1

- error: NULL

- aid: 230001

- 1 row in set (0.50 sec)

- mysql> EXPLAIN SELECT * FROM feed_urls LIMIT 230000, 1\G

- *************************** 1. row ***************************

- id: 1

- select_type: SIMPLE

- table: feed_urls

- type: ALL

- possible_keys: NULL

- key: NULL

- key_len: NULL

- ref: NULL

- rows: 431751

- Extra:

- 1 row in set (0.00 sec)

读取一条记录,耗时500ms,因为表记录是变长的,所以MySQL不能算出目标位置,只能每一条记录的数过去。

改进方法:

通过索引定位,数索引比数记录要快,因为索引占用的空间比整条记录小很多。

- mysql> SELECT * FROM (SELECT aid FROM feed_urls ORDER BY aid LIMIT 215000, 1) d JOIN feed_urls u ON d.aid=u.aid\G

- *************************** 1. row ***************************

- aid: 215001

- id: 2e4b1a385c8aae40b3ec2af9153805ca446f2029

- link: http://ncse.com/

- title: NCSE

- feed_url: NULL

- update_time: 2012-05-12 10:47:15

- state: queued

- http_server: NULL

- abstract: NULL

- previous_id: 819a6e3c5edc1624a9b8f171d8d3ae269843785f

- ref_count: 3

- error: NULL

- aid: 215001

- 1 row in set (0.06 sec)

- mysql> EXPLAIN SELECT * FROM (SELECT aid FROM feed_urls ORDER BY aid LIMIT 215000, 1) d JOIN feed_urls u ON d.aid=u.aid\G

- *************************** 1. row ***************************

- id: 1

- select_type: PRIMARY

- table:type: system

- possible_keys: NULL

- key: NULL

- key_len: NULL

- ref: NULL

- rows: 1

- Extra:

- *************************** 2. row ***************************

- id: 1

- select_type: PRIMARY

- table: u

- type: const

- possible_keys: aid

- key: aid

- key_len: 4

- ref: const

- rows: 1

- Extra:

- *************************** 3. row ***************************

- id: 2

- select_type: DERIVED

- table: feed_urls

- type: index

- possible_keys: NULL

- key: aid

- key_len: 4

- ref: NULL

- rows: 211001

- Extra: Using index

- 3 rows in set (0.15 sec)

耗时60ms,比之前的方法快了将近10倍。如果LIMIT语句里还有WHERE a=1,应该建立一个(a,aid)的索引。

话说,MySQL好像还是不能直接算出第21500条索引的位置呀,这种方法还是数了索引了,能算出来就直接0ms了。不过这样的效率,对于百万级的,还能应付吧。如果是千万级的或者像我之前在KS创建的一张上亿条记录的表(120G),这种方法就肯定不行了。

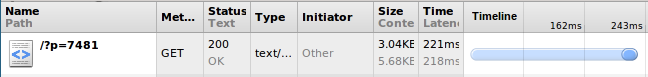

经过上述优化,打开最后一页的速度已经很快了(之前需要800ms,现在则为300ms左右)。

膜拜下这Burst.NET最低档次的VPS (30RMB/month)。

- root@xiaoxia-pc:~/# ping feed.readself.com -n

- PING app.readself.com (184.82.185.32) 56(84) bytes of data.

- 64 bytes from 184.82.185.32: icmp_req=1 ttl=45 time=161 ms

- 64 bytes from 184.82.185.32: icmp_req=2 ttl=45 time=161 ms

- 64 bytes from 184.82.185.32: icmp_req=3 ttl=45 time=161 ms

用同样的方法,优化了搜索引擎的排名算法。即排名过程中选取尽量少的值出来排序,排序后再JOIN一次获取结果的信息。

排序过程如下:

- SELECT u.*, count_level(u.id) lv

- FROM(

- SELECT f.id, f.ref_count, MATCH(i.link,i.title) AGAINST (keywords) score

- FROM feed_index i

- JOIN feed_urls f ON f.id=i.id

- WHERE MATCH(i.link,i.title) AGAINST (keywords)

- ORDER BY score*0.5 + score*0.5*(ref_count/max_ref_count_in_result) DESC

- LIMIT offset,10

- ) d JOIN feed_urls u ON u.id = d.id

目前处理10万记录的全文索引数据,MySQL还是可以满足的,就是不知道上百万之后,还能不能撑下去。撑不下去就依赖第三方的工具了,例如Sphinx ![]()

3. SELECT里的函数

给FeedDB增加了层次的显示。因为本人太懒,所以没有给数据库表增加一个记录深度的字段。所以,直接写了一个MySQL的自定义函数 count_level,用来统计通过parent_id一直找到顶层经过的路径长度(Level)。

- CREATE DEFINER=`feeddb_rw`@`%` FUNCTION `count_level`(fid char(40)) RETURNS int(11)

- BEGIN

- SET @levels = 0;

- SET @found = false;

- WHILE NOT @found DO

- SELECT previous_id INTO @prev_id FROM feed_urls WHERE id=fid;

- IF @prev_id is null OR @prev_id = '' THEN

- SET @found = true;

- ELSE

- SET @levels = @levels + 1;

- SET fid = @prev_id;

- END IF;

- END WHILE;

- IF @prev_id is null THEN

- RETURN null;

- END IF;

- RETURN @levels;

- END

在网页显示的时候用了类似下面的SQL语句。

- mysql> SELECT u.*, count_level(u.id) FROM feed_urls u ORDER BY ref_count DESC LIMIT 12000,1\G

- *************************** 1. row ***************************

- id: e42f44b04dabbb9789ccb4709278e881c54c28a3

- link: http://tetellita.blogspot.com/

- title: le hamburger et le croissant

- feed_url: http://www.blogger.com/feeds/7360650/posts/default

- update_time: 2012-05-15 14:50:53

- state: ok

- http_server: GSE

- abstract: Lepekmezest un épais sirop bordeaux obtenu par réduction dumoût de raisin, une sorte de mélasse de raisin, en somme. Légèrement acidulé, il apporte du pep's aux yaourts et nappe avec bonheur les

- previous_id: 129cabd96e7099a53b78c7ddeff98658351082e9

- ref_count: 9

- error: NULL

- aid: 174262

- count_level(u.id): 8

- 1 row in set (4.10 sec)

好吧,悲剧了!4100ms。一定对12000个条目都算了一次count_level,然后再进行排序。所以才用上了4秒那么漫长的时间!!!

改进方法:

先SELECT LIMIT,再在派生的临时表里,计算count_level。

- mysql> SELECT u.*, count_level(u.id) FROM (

- SELECT id FROM feed_urls ORDER BY ref_count DESC LIMIT 27521,1

- ) d JOIN feed_urls u ON u.id=d.id\G

- *************************** 1. row ***************************

- id: 61df288dda131ffd6125452d20ad0648f38abafd

- link: http://mynokiamobile.org/

- title: My Nokia Mobile

- feed_url: http://mynokiamobile.org/feed/

- update_time: 2012-05-14 14:06:57

- state: ok

- http_server: Apache/2.2.19 (Unix) mod_ssl/2.2.19 OpenSSL/1.0.0-fips mod_auth_passthrough/2.1 mod_bwlimited/1.4 FrontPage/5.0.2.2635

- abstract: ArchivesSelect MonthMay 2012April 2012March 2012February 2012January 2012December 2011November 2011October 2011September 2011August 2011July 2011June 2011May 2011April 2011March 2011February 2011Janua

- previous_id: f37af92bb89c08f6d4b69e72eab05d8ab1e2aca4

- ref_count: 5

- error: NULL

- aid: 154996

- count_level(u.id): 8

- 1 row in set (0.09 sec)

如此,优化之后效果好很多了!但是还可以继续优化,例如建立一个字段存储Level的值应该是最好的办法了。

- 没有章节